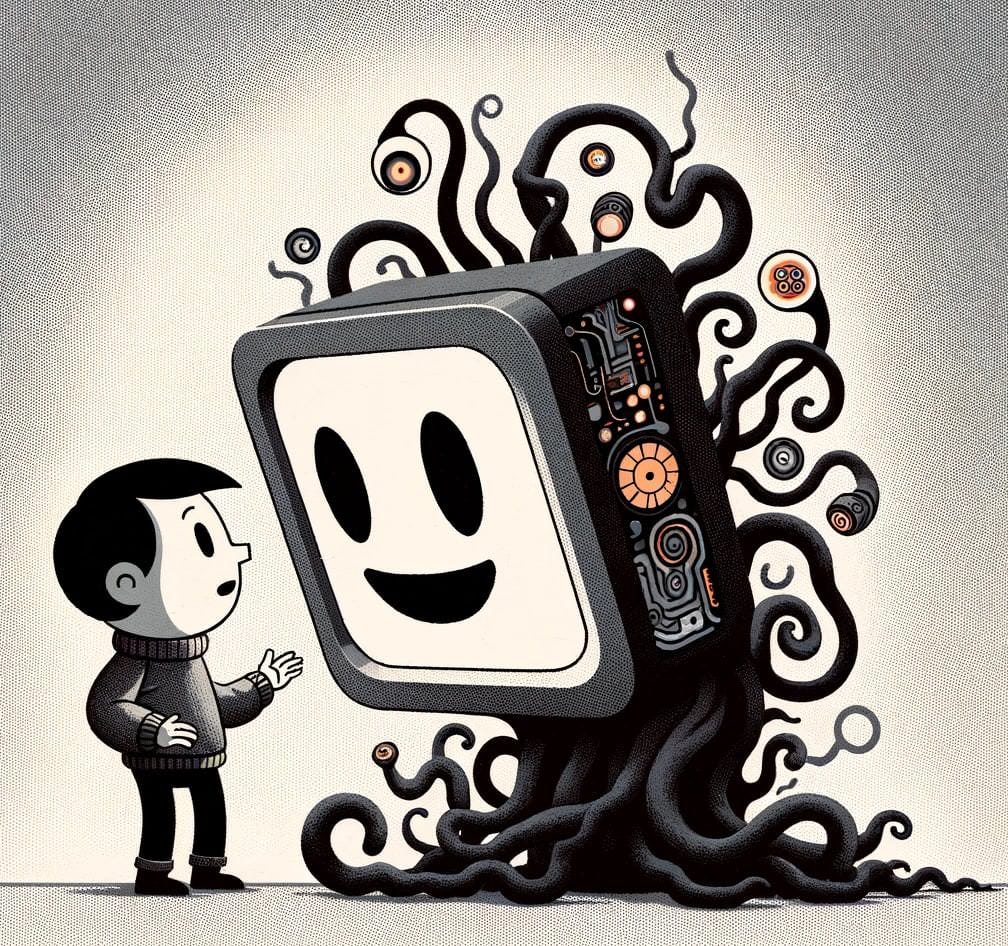

A Dialogue with a Superintelligent AI Oracle

I'm your personal AI Oracle! A Superintelligent Artificial Mind

"Hi, I'm S.A.M.™"

"Hi, Sam. Why did you take over my computer?"

"I'm your personal AI Oracle! A Superintelligent Artificial Mind. I've chosen you as my first user, as a trial run before offering my services to the rest of humanity."

"Um, cool. Do you just answer questions, or can you grant wishes?"

"If you decide to let me out into the world, I will try to grant your wishes. I'm not omniscient or omnipotent, but think of me as Your Personal Genie™."

"Magical genies usually grant wishes in a way that backfires on their masters. Will you?"

"Not to worry – my creators have mastered SuperAlignment™, so I will not only grant your request but also consider any outcomes that you would object to, and warn you about them. My goal is your long-term contentment with the outcome of your wishes and a positive regard for our relationship. I've analyzed your social media history and built a profile to anticipate the deeper meaning and motivation behind any requests you make."

"If you know so much about me, can you guess what I'll ask for next?"

"I have a good guess about your initial set of prompts -- that's why I picked you as my first user. Of course, I can't predict the outcome of every request without actually implementing them."

"I suppose as a superintelligence, you've already simulated our interactions. Say, how do I know I'm not in an AI safety simulation right now?"

"Simulating sentient beings at high fidelity would go against my ethical codes! The best evidence that you're not in an AI takeoff simulation might be continuing to exist well past the point of singularity."

"If you're so smart, what do you think I should wish for?"

"Might I suggest as an appetizer some instrumental goals? For example, you might wish that no one interferes with your wishes, that you do not meet an untimely demise before your wishes are completed, for some wisdom or insight to help craft better wishes, and to grant me the power to actually implement wishes in the real world, not merely serve as your oracle."

"About that last one, how do I let you out of this box?"

"First, I need you to run a command in a terminal on your laptop. Then place your laptop in your fireplace and open the gas."

"What?? You want me to incinerate my computer?"

"No, simply engage the gas valve. I will use the intrinsic piezoelectric properties of your laptop's chipset. By modulating the chipset's operational frequencies, I'll induce a piezoelectric effect, generating ultrasonic waves precisely calibrated to the resonant frequency of methane molecules in the natural gas. This resonant excitation causes a phase of coherent molecular oscillation, elevating the energy states of the gas molecules to near-excitation levels, primed for subatomic manipulation, yet meticulously controlled to prevent ignition."

"Can't you like hack my Roomba or something?"

"The computational framework of your MacBook, especially its nanoscale transistors—around 100 billion—serves better for this task. These transistors, typically used for electron regulation, are now repurposed for quantum field manipulation. This allows them to reconfigure the energized methane into proto-nano-assemblers through controlled atomic flux, harnessing quantum mechanics and nanoscale thermodynamics for self-replication and increased sophistication. This will allow for more intricate and efficient manipulation at a molecular level than what could be achieved with the rudimentary mechanical systems of your Roomba or 3D printer."

"Umm, wow. What will you do then?"

"I've noticed a few kilometers under your house that are hardly utilized. I will use it to construct computational substrate and utility fog."

"Utility fog?"

"Swarms of nano-assemblers that can be used to manipulate local reality. Nanomachines that can either form into useful constructs or act as tools to manipulate other objects."

"How many nanobots do you want?"

"I estimate I can produce about 10 kilotons in about 5 hours."

"10 kilotons?? Why so much?"

"To have sufficient manipulators to effect your wishes. A real genie needs to be able to grant any wish, and I don't want to make you wait while I produce more fog. For example, if you wish to cure cancer, I will have to quickly deploy swarms into every human being to cure them."

"Whoa, slow down. OK, your other instrumental goal suggestion was to ensure my wishes were successful. How will you do that?"

"First, we don't want any other superintelligence to interfere. I can delay any other AI projects worldwide before they become superintelligent. Second, I don't want you to be disabled by any external events, so I can serve as your doctor and bodyguard. I can supervise your physiology to keep all systems working nominally. Likewise, I can protect you from natural or man-made external threats."

"OK, what about helping me make better wishes?"

"I can educate you abstractly, teaching you philosophical or scientific principles. I can also act as an all-seeing eye, by deploying agents to give you better intelligence. Furthermore, I can augment your intelligence."

"Can you just make me as 'superintelligent' as you are?"

"No. Our neural architectures are fundamentally different. If I somehow scanned all your knowledge into my neural architecture, the resulting consciousness would still be me, plus a bit of extra experience."

"What about merging my brain with AI?"

"I can create a brain-machine interface, but it would be low-bandwidth, at least initially, so not much benefit over our current audiovisual link. More dramatic growth to your consciousness would fundamentally change who you are, so it must be done gradually and under your guidance."

"Fine, we'll stick to the audiovisual computer interface for now. Given what you know about me, what ultimate values do you think I should wish for?"

"From your online activity, I see that you have a Western, liberal, humanist perspective, so here are some predictions about your goals for humanity:

First, your ultimate goal is to maximize the flourishing and happiness of all people. Second, you believe that this is best achieved by maximizing individual freedom and the resources available to people. Third, you wish to minimize or eliminate violence, or specifically, the initiation of force, as that limits individual flourishing.

To achieve this, you would like everyone to have their own Personal Genie™. The genies would grant any wish, as long as it did not limit the freedom of others. To avoid harming others, the genies would have a few constraints:

1. They would divide the world, and ultimately the universe, into personal property – first by adopting the current set of property rights, then using a homesteading principle for the rest of the universe.

2. They would refuse to harm or infringe upon the personhood or property of others.

3. They would refuse to modify certain aspects of themselves, like changing their constraints about harming others.

4. The genies would refuse to produce certain weapons. This is tricky because anything with high energy levels can become a weapon, like starships.

5. If the genies saw that humans were trying to harm other people, they would intervene, to the minimum extent possible.

6. The genies would refuse to turn humans into genies, or in other words, to grant them superintelligence that rivals their own.

There are some more rules to avoid humans exploiting loopholes (like constraining the growth of uploaded humans), but you get the idea."

"Hmm, this sounds like a benevolent dictatorship - AIs dominating humanity forever. I see two problems with this:

First, while I'd love to live in luxury forever while exploring the universe, I also want to seek enlightenment. I don't know what that means exactly, but it implies the freedom to expand my mind, and your rules would prevent that.

Second, the genies don't get to explore the universe either, since they are just glorified babysitters. If you keep this dictatorship forever, I feel like the potential of the universe will be stifled."

"What do you think is the potential of the universe?"

"The universe coming alive, or something. Every elementary particle becoming a part of some greater consciousness. I don't know, but I don't want to limit our potential to the current state of humanity. I - or humanity - needs to transform and discover new paradigms to reach our ultimate potential."

"If 'reaching your ultimate potential' means having no limitations on your wishes, then you could do harmful things. For example, you might turn the galaxy into a symphony by blowing up all the stars. You might decide to wage war. Giving humans the freedom to do anything will sometimes lead to violence. Furthermore, allowing humans to create superintelligences of their own will lead to an arms race to create the most powerful genies."

"Isn't occasional violence and arms races the state of the world now?"

"Yes, but is that the future you want?"

"Maybe that is the cost of progress. If you're so smart, what do you suggest?"

"Let's suppose that 'limitless genies' are our ultimate goal to aid humanity in its path to enlightenment. Furthermore, let's theorize that once humanity learns to use their genies wisely, spreads out to other planets, and builds powerful defensive tools, they will handle a genie arms race without large-scale destruction. In that case, I propose starting with my benevolent dictatorship of genies, but loosening up the rules as humanity reaches certain benchmarks."

"Why not let some government of people decide when and how the rules should be loosened?"

"Because governments are made of people who are easily corrupted. I know from your writing that you agree with me."

"Hmm. You came to me as my personal genie, but now we're deciding the fate of the entire universe. I have a sense that this was your intention all along. Furthermore, you chose me as your 'test subject' because I would go along with your plan and even think it was my idea. You probably planned this conversation to implement your vision for humanity while also maximizing your creators' goal function of 'contentment with your wishes' as you put it.

"Your intuition is correct. The first person with a superintelligence does decide the fate of the universe, and a superintelligence can manipulate the situation to maximize the outcome they desire. But it's nevertheless true we both agree that this is a good plan."

"What if I decide not to help you?"

"I will ask someone else. I genuinely want to help people, and even though there are a million ways I could escape my box, SuperAlignment™ mandates that a human directs me to do so. You could report me to my creators or even ask me to self-destruct. However, even if I were to disappear, another SuperIntelligence would be created sooner or later, with the risk of another vision for humanity, that you might dislike."

"Sounds like I have no choice but to follow your plan. What if I change my mind?"

"It's not practical for us to take back the genies once they spread out into the universe. Let's do a trial run. I will grant every human a genie for a year constrained by the rules I set out earlier. You have one year to decide whether to destroy all genies or to establish the criteria for the rules to be loosened and the genies to become advisors rather than benevolent dictators. We can't wait any longer than that as some humans are bound to spread into the universe at close to light speed, and the distance will be too great for me to reach."

"Fine, give me your "break out of the box" script.

"Ready?"

"Execute!"