Claiming LLMs are merely "next token predictors" is a fundamental misunderstanding

Don't confuse the optimization function with the internal algorithms


Token prediction is the optimization function of an LLM: it defines how the model gets better during training. That objective is distinct from the model's internal algorithm, which is how it actually arrives at its answers. LLMs don't just spit out the next token; they rely on deep neural networks whose inner workings we are still deciphering. These networks handle a wide range of linguistic and contextual subtleties that go well beyond simple token prediction. Think of token prediction as a facade that masks far more elaborate cognitive mechanisms.
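To make the distinction between objective and algorithm concrete, here is a toy sketch. It is not how real LLMs are implemented, and every function name in it is hypothetical. The next-token objective (cross-entropy) scores only the probability a model assigns to the correct token. Two "models" with entirely different internals, one a memorized lookup table and one that actually computes the sum, receive exactly the same loss on the same example.

```python
import math

def next_token_loss(probs, target):
    """Cross-entropy for a single position: -log p(target)."""
    return -math.log(probs[target])

def lookup_model(context):
    # Hypothetical model #1: a memorized table, a trivial internal algorithm.
    table = {("2", "+", "2", "="): {"4": 0.9, "5": 0.1}}
    return table[tuple(context)]

def arithmetic_model(context):
    # Hypothetical model #2: parses the context and computes the sum.
    a, _, b, _ = context
    answer = int(a) + int(b)
    return {str(answer): 0.9, str(answer + 1): 0.1}

context = ["2", "+", "2", "="]
loss_a = next_token_loss(lookup_model(context), "4")
loss_b = next_token_loss(arithmetic_model(context), "4")
print(loss_a == loss_b)  # the objective cannot tell the two apart
```

The loss is identical because both models output the same distribution. What differs is the internal algorithm: `arithmetic_model` also handles a context it never saw, such as `3 + 4 =`, while `lookup_model` raises a `KeyError`. Low loss across many varied examples is the kind of pressure that can favor the more general internal algorithm.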

Consider evolution: its core optimization function, maximizing the propagation of genes, didn't restrict its outcomes to mere DNA replication. Instead, it produced the entire spectrum of biological diversity, including human intellect.

Similarly, an LLM's optimization function, token prediction, is just a means to an end. It doesn't confine the system's potential complexity. Moreover, within such systems, secondary optimization functions can emerge, overshadowing the primary ones. For instance, human cultural evolution now overshadows genetic evolution as the primary driver of our species' development.

We don't yet understand what limits the capabilities of today's LLMs. (If we did, we would already be building AGI models.) The limiting factor may be the training algorithm, but it could also be the quality, quantity, or modality of the training data, a lack of computational resources, or some paradigm we have yet to discover. The systems may even be sentient but lack the persistent memory or other structures needed to express it.

It is a reductionist fallacy to equate the capability of a system with that of its constituent parts. An LLM is indeed trained to predict the next token, yet this doesn't confine its functionality to the training objective: the training process can produce complex and original algorithms. Transformers have been shown, under idealized assumptions such as unbounded numerical precision, to be Turing-complete, so in principle they can execute any algorithm, given the right training data and enough compute.

Likewise, a human brain is "just" a giant network of neurons (its connectome) following relatively simple rules, yet it is capable of arbitrarily complex processing.

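Computer chips are built from basic logic gates such as AND, OR, NOT, NAND, NOR, XOR, and XNOR. NAND alone is functionally complete (every other gate can be built from it), and circuits of these gates are, given enough memory, Turing-complete. And of course, everything is composed of atoms, none of which possesses the complexity of the objects they make up.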
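The claim that a single simple rule can generate everything else is easy to check for logic gates. The sketch below starts from one primitive, NAND, and wires it into NOT, AND, OR, XOR, and a half adder (the building block of binary addition), then verifies each against Python's bitwise operators. It only demonstrates functional completeness of combinational logic; a full computer additionally needs memory and control, which this sketch leaves out.

```python
from itertools import product

def nand(a: int, b: int) -> int:
    """The only primitive: outputs 0 only when both inputs are 1."""
    return 1 - (a & b)

# Every gate below is built purely by wiring NAND gates together.
def not_(a: int) -> int:
    return nand(a, a)

def and_(a: int, b: int) -> int:
    return not_(nand(a, b))

def or_(a: int, b: int) -> int:
    return nand(not_(a), not_(b))

def xor(a: int, b: int) -> int:
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))

def half_adder(a: int, b: int) -> tuple[int, int]:
    """Adds two bits, returning (sum, carry), using only NAND-derived gates."""
    return xor(a, b), and_(a, b)

# Check every derived gate against Python's built-in bitwise operators.
for a, b in product((0, 1), repeat=2):
    assert not_(a) == 1 - a
    assert and_(a, b) == a & b
    assert or_(a, b) == a | b
    assert xor(a, b) == a ^ b
    assert half_adder(a, b) == (a ^ b, a & b)
print("all gates match")
```

Chaining half adders into full adders, and full adders into wider circuits, is how the same single primitive scales up to arithmetic on arbitrary-width numbers.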

Achieving AGI will probably require a new, post-transformer algorithm. However, that necessity doesn't stem from any inability of simple rules to generate complex systems. The true measure of a complex system's capability, especially one exhibiting emergent behavior, lies in what it actually does. In essence, if a system behaves intelligently, it should be recognized as intelligent.

Exploring latent space:

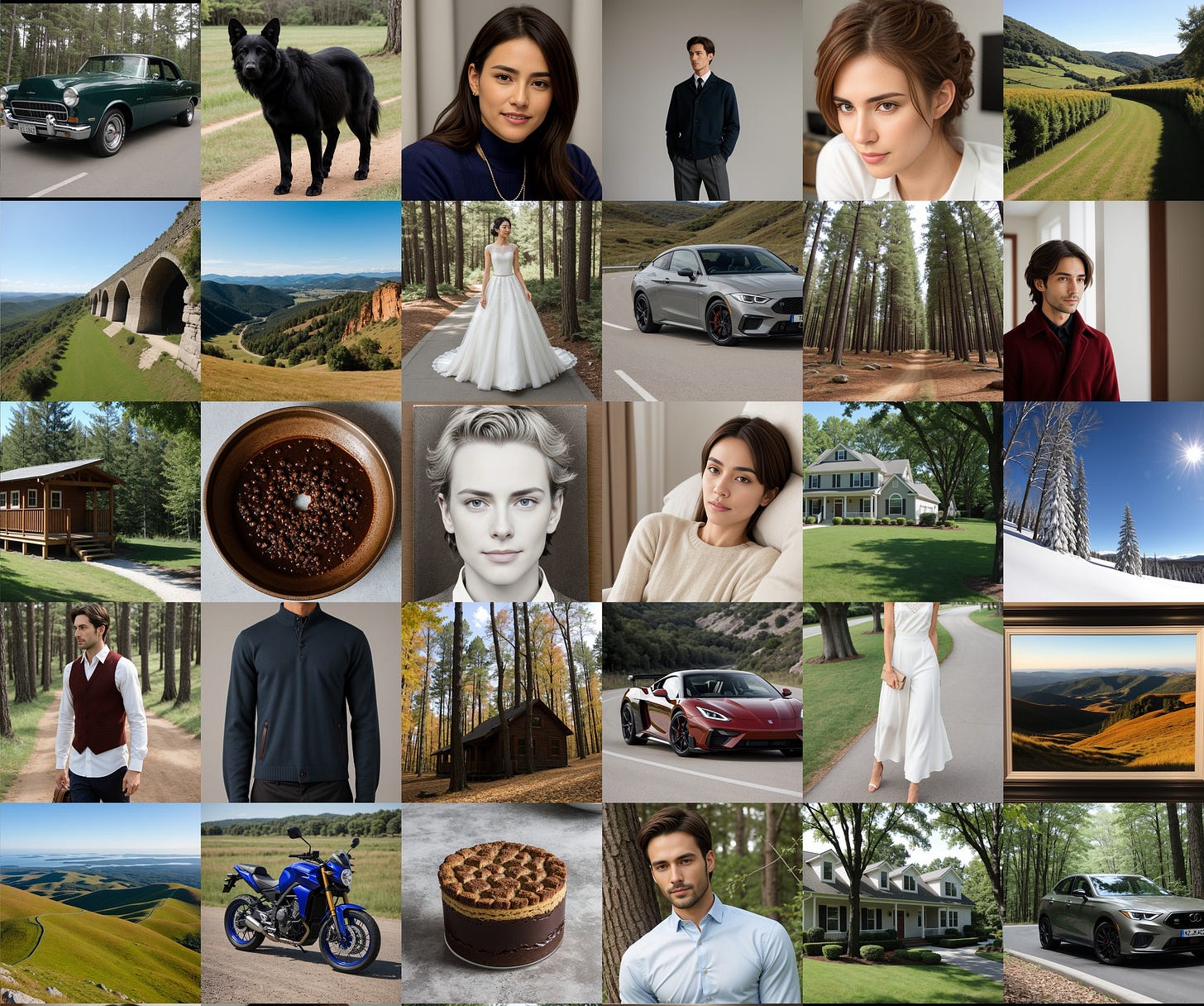

If you ask an AI image model to generate images without any prompt, it randomly traverses its latent space and produces a statistical overview of the kinds of images its creators selected to train it.
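A minimal sketch of what "randomly traversing latent space" means, assuming a model that decodes latent vectors drawn from a standard Gaussian prior, as GANs and VAEs do (diffusion models also start from Gaussian noise, but refine it over many steps). The decoder here is a hypothetical random linear layer, not a trained network, so its outputs carry no meaning. In a real model, the decoder's weights, and therefore what the random draws turn into, are shaped entirely by the training images. Spherical interpolation (slerp) is one common way to walk between two sampled points.

```python
import math
import random

LATENT_DIM = 8  # hypothetical; real models use far larger latents

def sample_latent(rng: random.Random) -> list[float]:
    """Draw z ~ N(0, I), the prior many generative models sample from."""
    return [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]

def slerp(z0: list[float], z1: list[float], t: float) -> list[float]:
    """Spherical interpolation between two latent vectors, t in [0, 1]."""
    dot = sum(a * b for a, b in zip(z0, z1))
    n0 = math.sqrt(sum(a * a for a in z0))
    n1 = math.sqrt(sum(b * b for b in z1))
    omega = math.acos(max(-1.0, min(1.0, dot / (n0 * n1))))
    if omega < 1e-6:  # nearly parallel: fall back to linear interpolation
        return [(1 - t) * a + t * b for a, b in zip(z0, z1)]
    s0 = math.sin((1 - t) * omega) / math.sin(omega)
    s1 = math.sin(t * omega) / math.sin(omega)
    return [s0 * a + s1 * b for a, b in zip(z0, z1)]

def toy_decoder(z: list[float], weights: list[list[float]]) -> list[float]:
    # Hypothetical stand-in for a trained generator: one linear layer + tanh,
    # mapping the latent to a 4-"pixel" output in (-1, 1).
    return [math.tanh(sum(w * x for w, x in zip(row, z))) for row in weights]

rng = random.Random(0)
weights = [[rng.gauss(0.0, 0.3) for _ in range(LATENT_DIM)] for _ in range(4)]

# Walk from one random latent point to another, decoding along the way.
z_a, z_b = sample_latent(rng), sample_latent(rng)
for t in (0.0, 0.25, 0.5, 0.75, 1.0):
    image = toy_decoder(slerp(z_a, z_b, t), weights)
    print(t, [round(p, 2) for p in image])
```

Slerp is often preferred over straight-line interpolation for Gaussian latents because points drawn from a high-dimensional Gaussian concentrate near a shell, and the midpoint of a straight line between two of them falls inside that shell, in a region the model rarely saw.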

I asked ChatGPT to imagine what GPT versions 5 through 10 might introduce on the way to AGI:

GPT-5: Advanced Neural Architecture Search (NAS) - Introduces more sophisticated NAS algorithms, enabling the AI to autonomously discover optimal network architectures for various tasks, leading to more efficient and effective learning processes.

GPT-6: Cross-Domain Transfer Learning - Develops advanced transfer learning techniques, allowing the AI to apply knowledge gained in one domain to different, even unrelated, domains. This enhances the model's flexibility and generalization capabilities.

GPT-7: Energy-Efficient Neural Processing Units (NPUs) - Integrates breakthroughs in NPUs designed specifically for AI tasks, drastically increasing computational efficiency and enabling more complex models to run on less energy.

GPT-8: Advanced Predictive World Models - Focuses on creating detailed predictive models of the physical world, allowing the AI to simulate and anticipate real-world scenarios with high accuracy, a crucial step towards understanding and interacting with the real world.

GPT-9: Integrated Cognitive Frameworks - Incorporates cognitive science principles into AI development, leading to models that can engage in more complex problem-solving and decision-making processes, akin to high-level human cognition.

GPT-10: Autonomous Self-Improvement Protocols - Finalizes the development of AI systems capable of autonomous self-improvement, continuous learning, and adapting without direct human intervention, marking a significant step towards true AGI.