I am trying to understand the root of the disagreement between AI skeptics and AI capability optimists.

My basic thesis is this:

If a software system can reproduce the function of a biological brain at any scale, then creating a generally intelligent, human-level system is mainly a matter of scaling. The brain is composed of modules, or regions, that are specialized for processing specific types of information. We’ve been able to simulate some of these regions in software, so it’s likely that we’ll be able to extend this approach to all other capabilities. Given the history of computing, it is very likely that AI systems will continue to scale at an exponential rate. Therefore, as soon as I was convinced that AI systems were reproducing some aspect of human reasoning, I became convinced of a timeline to human-level reasoning, and beyond.
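The "timeline" step in this argument is simple arithmetic once exponential growth is assumed: if capability doubles every T_d years, closing a gap of factor G takes t = T_d × log2(G) years. A minimal sketch of that calculation, in which both the gap and the doubling time are hypothetical inputs, not estimates made in this post:

```python
import math


def years_to_close_gap(capability_gap: float, doubling_time_years: float) -> float:
    """Years of steady exponential growth needed to multiply capability by `capability_gap`.

    Solves gap = 2 ** (t / doubling_time) for t, i.e. t = doubling_time * log2(gap).
    Both arguments are hypothetical placeholders, not measured values.
    """
    if capability_gap < 1:
        raise ValueError("capability_gap must be >= 1")
    if doubling_time_years <= 0:
        raise ValueError("doubling_time_years must be positive")
    return doubling_time_years * math.log2(capability_gap)


# Hypothetical example: a 1000x gap, closed at one doubling per year.
print(round(years_to_close_gap(1000, 1.0), 2))  # prints 9.97
```

The result is linear in the assumed doubling time but only logarithmic in the size of the gap, so a timeline built this way depends far more on the doubling-time assumption than on how large the gap is.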

To use an analogy: as soon as the Wright brothers made the first powered, heavier-than-air flight, it was reasonable to conclude that airplanes would eventually compete with any bird, and even far surpass them.

We should judge technologies on their ultimate ability, not their initial capabilities. But what is the ultimate ability of AI? Well, it is likely to be at least genius human level, since human geniuses already exist. Quite likely the limits are well beyond the best humans, given that artificial systems are not constrained by evolution.

What will it take to reach AGI?

The human brain is a complex network of approximately 86 billion neurons, forming intricate connections and firing patterns that give rise to intelligence, consciousness, and the myriad capabilities of the human mind. While artificial intelligence has made remarkable progress in recent years, achieving human-level intelligence – often referred to as Artificial General Intelligence (AGI) – remains a daunting challenge.

One of the key reasons for the difficulty in creating AGI is the fundamental difference between computer architecture and neural architecture. Traditional computer systems are based on rigid, predefined rules and algorithms, whereas the brain is a highly adaptive, self-organizing system that learns and evolves over time. This discrepancy has led to slower-than-expected progress in AI and has contributed to public pessimism about the prospects of achieving AGI.

The brain, while vastly complex, is still a computational system that follows the laws of physics and can be modeled in software. This understanding has been validated through research in computational neuroscience, where simulations of neural networks have successfully reproduced various aspects of biological intelligence, from sensory processing to decision-making.

To create AGI, three key ingredients are necessary: (1) sufficiently large and sophisticated neural networks, (2) effective training algorithms that can guide these networks towards intelligent behavior, and (3) rich, diverse training data that captures the breadth of human knowledge and experience.

1. Large-Scale Neural Networks:

Recent advances in AI, particularly in the domain of large language models (LLMs) like GPT-4, have demonstrated the potential of massive neural networks. With hundreds of billions to trillions of parameters, these models have computational power that rivals or even exceeds that of the human brain. While the exact correspondence between parameters and neurons is not clear, the fact that we can recreate the functions of simple neural systems leads me to conclude that the computational power of existing models already exceeds that of the human brain. The future challenge is to make these systems cheaply available at scale.
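How this comparison comes out depends on which biological unit a parameter is matched against. A minimal sketch of the arithmetic, assuming either a one-parameter-per-neuron or a one-parameter-per-synapse mapping; the neuron count comes from this post, while the synapse figure is an assumed, commonly cited order-of-magnitude estimate:

```python
# Neuron count as cited earlier in this post.
NEURONS = 86e9
# Assumption: a commonly cited order-of-magnitude estimate of synapse count.
SYNAPSES = 1e14


def ratio(params: float, unit_count: float) -> float:
    """Number of model parameters per biological unit under a one-to-one mapping."""
    return params / unit_count


# "Hundreds of billions to trillions" of parameters.
for params in (1e11, 1e12):
    print(
        f"{params:.0e} params: "
        f"{ratio(params, NEURONS):.2f} per neuron, "
        f"{ratio(params, SYNAPSES):.3f} per synapse"
    )
```

Under the neuron mapping, a trillion-parameter model has roughly 12 parameters per neuron; under the synapse mapping it has about 0.01 per synapse. Which mapping (if either) is appropriate is exactly the open correspondence question the paragraph raises.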

2. Training Algorithms and Cognitive Architectures:

The success of AGI will depend not just on the size of neural networks, but also on the algorithms used to train them. While the cognitive architectures of current AI systems differ significantly from those of humans, there are important similarities in the way both systems learn and adapt. In particular, both biological and artificial neural networks are shaped by iterative processes of learning and selection, where successful patterns and behaviors are reinforced over time.

From this, we can conclude that (1) the right kind of selection pressure will lead to synthetic intelligence, and (2) it is not necessary to reverse-engineer human reasoning, only to understand the kind of selection pressure that enables intelligence to emerge.
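The general idea of selection pressure can be illustrated with a toy evolutionary loop. The sketch below is a generic mutation-and-selection algorithm, not a model of how any AI system is actually trained: the "genome" is a bit string, and the fitness function (count of 1 bits, the textbook "OneMax" problem) is a hypothetical stand-in for whatever a real selection pressure would reward. It shows only the narrow point that repeated variation plus selection improves fitness without anyone designing the solution directly.

```python
import random


def evolve(genome_len: int = 32, pop_size: int = 50, mutation_rate: float = 0.02,
           generations: int = 200, seed: int = 0) -> list[int]:
    """Toy (mu + lambda)-style loop with lambda == mu: mutate, score, keep the fittest.

    Fitness is the number of 1 bits (hypothetical stand-in for a real objective).
    """
    rng = random.Random(seed)
    fitness = sum  # counts the 1 bits in a genome
    population = [[rng.randint(0, 1) for _ in range(genome_len)] for _ in range(pop_size)]
    for _ in range(generations):
        # Variation: each parent yields one child; each bit flips with prob. mutation_rate.
        children = [[bit ^ (rng.random() < mutation_rate) for bit in parent]
                    for parent in population]
        # Selection pressure: only the pop_size fittest of parents + children survive.
        population = sorted(population + children, key=fitness, reverse=True)[:pop_size]
    return max(population, key=fitness)


best = evolve()
print(sum(best), "of", len(best), "bits set")
```

Because the fittest individuals always survive (elitist selection), the best fitness in the population can never decrease from one generation to the next.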

3. Diverse and Comprehensive Training Data:

The quality and diversity of training data will be essential for creating AGI systems that can match the breadth and depth of human intelligence. While current AI systems are often trained on large datasets of text, images, or other digital content, this data only captures a fraction of the information and experiences that shape human intelligence.

We don’t yet know whether intelligence requires the full spectrum of human knowledge, skills, and experiences in an embodied form. Humans develop on a much smaller body of data than LLMs are trained on. My guess is that better training algorithms will reduce the need for massive training datasets and enable models to learn from real-time streaming data, much as humans do.

Conclusion:

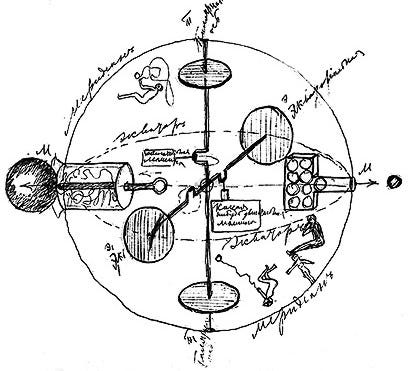

Sixty-six years separated the first successful airplane flight and the first Moon landing. Was it possible to predict the Moon landing upon hearing about that first flight? In 1903, Konstantin Tsiolkovsky (Константин Циолковский) published his famous rocket equation, and as early as 1883 he had drawn a spaceship that embodied the basic operating principles of modern spaceflight. Rocket scientists theorized and experimentally validated the core principles of spaceflight several decades before it was achieved, giving space programs the confidence to invest in their development.
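For reference, the rocket equation in its standard form relates the change in velocity a rocket can achieve, Δv, to its effective exhaust velocity v_e and the ratio of its initial (fueled) mass m_0 to its final (dry) mass m_f:

$$
\Delta v = v_e \ln \frac{m_0}{m_f}
$$

Because Δv grows only with the logarithm of the mass ratio, the equation made clear well in advance that reaching orbit would demand high exhaust velocities and very large mass ratios, which in practice pointed toward multi-stage rockets.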

Yes, if the operating principle of a technology can be experimentally validated, we can have confidence that scaling up will result in successful implementation. This is how all radical new technologies work: the steam engine, the gasoline automobile, the airplane, nuclear weapons, and soon, artificial general intelligence.